Machine learning software has become a big deal in the past few years.

PyTorch is a popular machine learning framework for the Python programming language. It runs on CPUs and on NVIDIA GPU-accelerated platforms, which helps you build and train models quickly.

This post gives an overview of PyTorch: what it is, how to install it, its features, its benefits, and the drawbacks that might lead you to consider an alternative.

What is PyTorch?

PyTorch is a machine learning framework based on the Torch library. It is used for applications such as computer vision and natural language processing.

It is free and open-source software released under the modified BSD license. It was originally developed by Meta AI and is now part of the Linux Foundation umbrella.

Pricing

PyTorch is free to use. Because it is open-source software released under the modified BSD license, there is no pricing to request from a vendor.

How to install PyTorch?

On the install selector of the PyTorch website, choose the options that match your setup and run the install command it displays. “Stable” represents the most tested and supported version of PyTorch.

Make sure you have met the prerequisites below before installing this package, depending on your package manager.

Prerequisites

- Supported Windows distributions: Windows 7 and greater (Windows 10 or greater is recommended), Windows Server 2008 R2 and greater

- Python: PyTorch on Windows only supports Python 3.7-3.9; Python 2.x is not supported.
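The Python prerequisite can be checked before installing anything. The following is a minimal sketch that uses only the standard library; the helper name `python_is_supported` and the two constants are illustrative, and the range is simply the 3.7-3.9 range quoted above, which may have changed since this post was written.

```python
import sys

# Range quoted in the prerequisites above; adjust it if the official
# requirements have changed since this post was written.
MIN_SUPPORTED = (3, 7)
MAX_SUPPORTED = (3, 9)


def python_is_supported(version=None):
    """Return True if (major, minor) lies within the supported range."""
    if version is None:
        version = sys.version_info
    major_minor = tuple(version[:2])
    return MIN_SUPPORTED <= major_minor <= MAX_SUPPORTED


print("Running Python", ".".join(map(str, sys.version_info[:3])))
print("Within the 3.7-3.9 range:", python_is_supported())
```

Passing an explicit tuple, for example `python_is_supported((3, 8))`, checks a version other than the one currently running.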

Python itself can be installed in any of the following ways:

- Chocolatey

- Python website

- Anaconda

If you use Anaconda to install PyTorch, it will also install its own copy of Python, which is used for running PyTorch applications.

Package manager

You can install the PyTorch binaries using two different package managers: Anaconda or Pip. Anaconda is the recommended package manager; install it using its “64-bit graphical installer”.

Installation

Anaconda

If you plan to install PyTorch with Anaconda, open the Anaconda prompt via

- Start

- Anaconda3

- Anaconda Prompt

No CUDA

If you want to install PyTorch using Anaconda and do not have a CUDA-capable computer, choose the following on the selector.

- OS: Windows

- Package: Conda

- CUDA: None

With CUDA

If you want to install PyTorch using Anaconda and have a CUDA-capable computer, select the following.

- OS: Windows

- Package: Conda

- CUDA: the version that is right for your machine

Pip

No CUDA

If you want to install PyTorch using Pip and you do not have a CUDA-capable system, or you do not need CUDA, choose the following on the selector.

- OS: Windows

- Package: Pip

- CUDA: None

Then, run the command that is shown.

With CUDA

If you want to install PyTorch using Pip with CUDA, first make sure your computer is CUDA-capable. Then choose OS: Windows, Package: Pip, and the CUDA version suited to your machine on the selector. The latest CUDA version is often, though not always, the best choice.

As the last step, verify the installation by running some sample PyTorch code.
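A minimal sketch of such a verification script follows. The function name `check_pytorch` and the layout of the report are illustrative choices, not part of PyTorch; the script builds a small random tensor and reports whether CUDA is usable, and it returns a short "not installed" report instead of failing if the package is missing.

```python
import importlib.util


def check_pytorch():
    """Return a small report describing the local PyTorch installation."""
    # find_spec returns None when the package cannot be found.
    if importlib.util.find_spec("torch") is None:
        return {"installed": False}

    import torch

    # Building a random tensor exercises the core library.
    sample = torch.rand(5, 3)
    return {
        "installed": True,
        "version": torch.__version__,
        "sample_shape": tuple(sample.shape),
        # True only when a CUDA build and a usable GPU are both present.
        "cuda_available": torch.cuda.is_available(),
    }


report = check_pytorch()
print(report)
```

If `installed` is true and `sample_shape` is `(5, 3)`, the basic installation works. A `cuda_available` value of false on a machine with an NVIDIA GPU usually suggests that a CPU-only build was installed or that the GPU driver needs attention.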

Features

Production Ready

With TorchScript, PyTorch keeps the ease of use and flexibility of eager mode while letting you switch to graph mode for the speed and functionality needed in a C++ runtime environment.
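As a rough illustration of that transition, the sketch below compiles a small module with `torch.jit.script`, serializes it to an in-memory buffer, and loads it back. The `Scale` module is a hypothetical toy example; a real project would usually save to a file that a C++ runtime can then load.

```python
import io

import torch


class Scale(torch.nn.Module):
    """Hypothetical toy module with a data-dependent branch."""

    def __init__(self, factor: float):
        super().__init__()
        self.factor = factor

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # Scripting keeps both branches of this condition in the graph.
        if x.sum() > 0:
            return x * self.factor
        return -x * self.factor


eager = Scale(2.0)                  # ordinary eager-mode module
scripted = torch.jit.script(eager)  # compiled TorchScript version

# Serialize to memory and load it back, as a stand-in for a file on disk.
buffer = io.BytesIO()
torch.jit.save(scripted, buffer)
buffer.seek(0)
restored = torch.jit.load(buffer)

x = torch.tensor([1.0, 2.0, 3.0])
print(eager(x))
print(restored(x))
```

The eager module and the restored scripted module should produce the same values for the same input, whichever branch the input triggers.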

TorchServe

TorchServe is a tool for deploying PyTorch models. It supports features such as multi-model serving, logging, and metrics. It also creates RESTful endpoints for application integration, so client applications can call a model from any programming language.

Distributed Training

Native support for asynchronous execution of collective operations and for peer-to-peer communication, accessible from both Python and C++, can improve performance in research and production.

Here, peer-to-peer communication means data exchanged directly between the worker processes that take part in a training job.

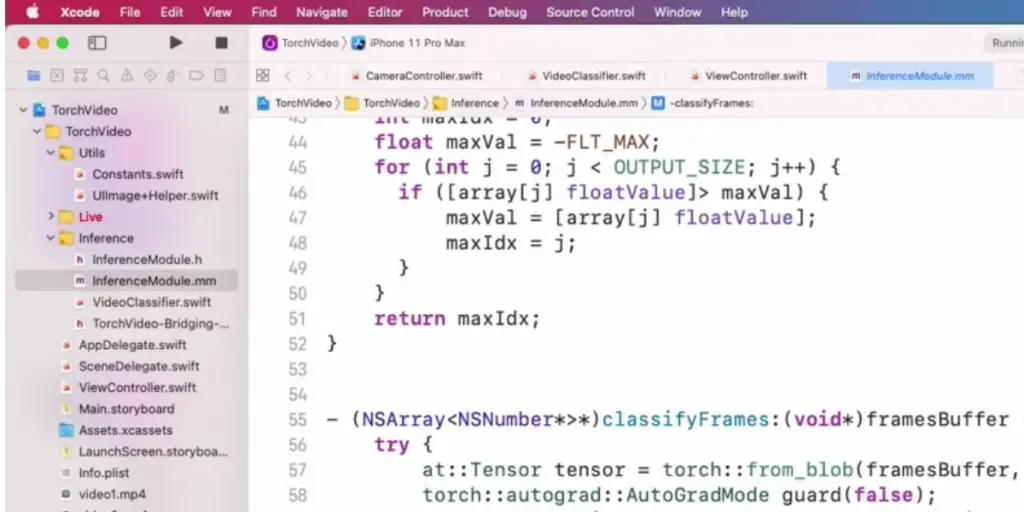

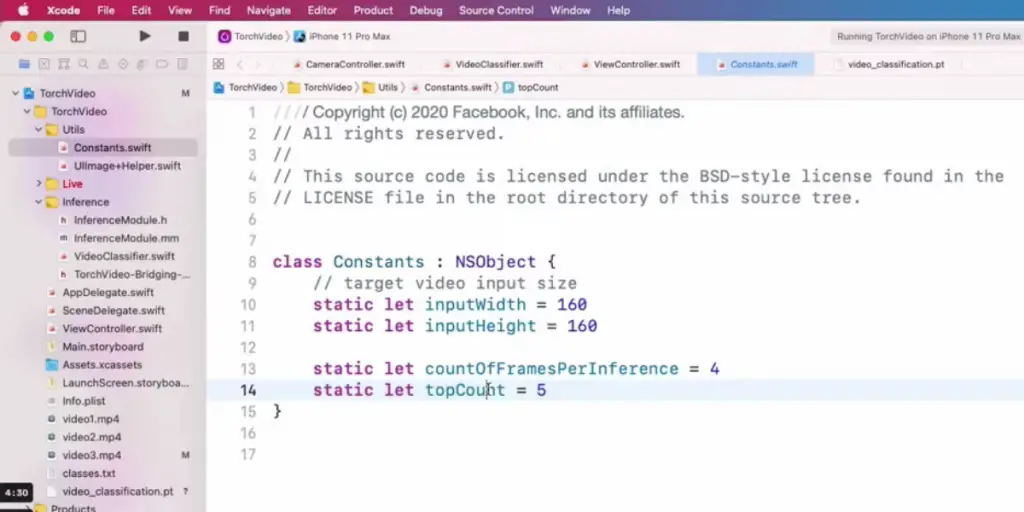

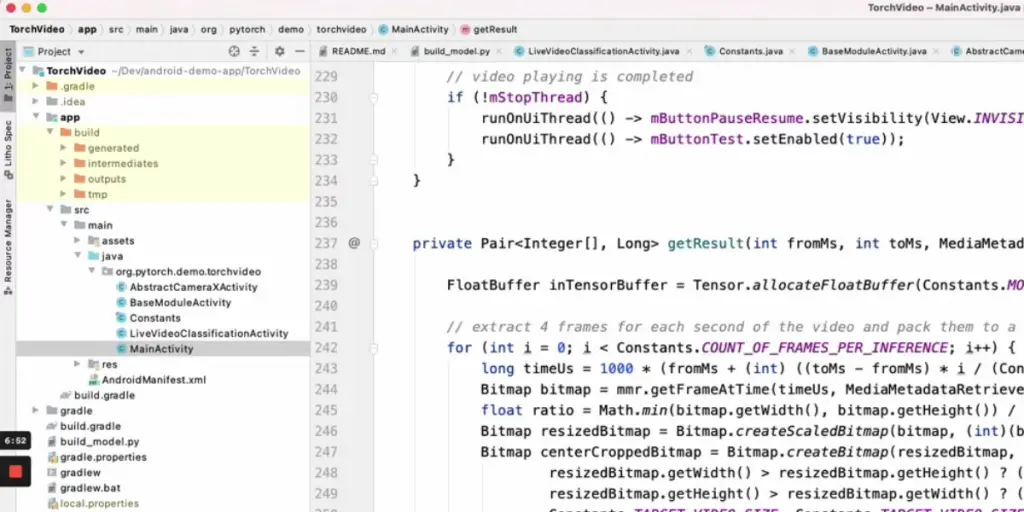

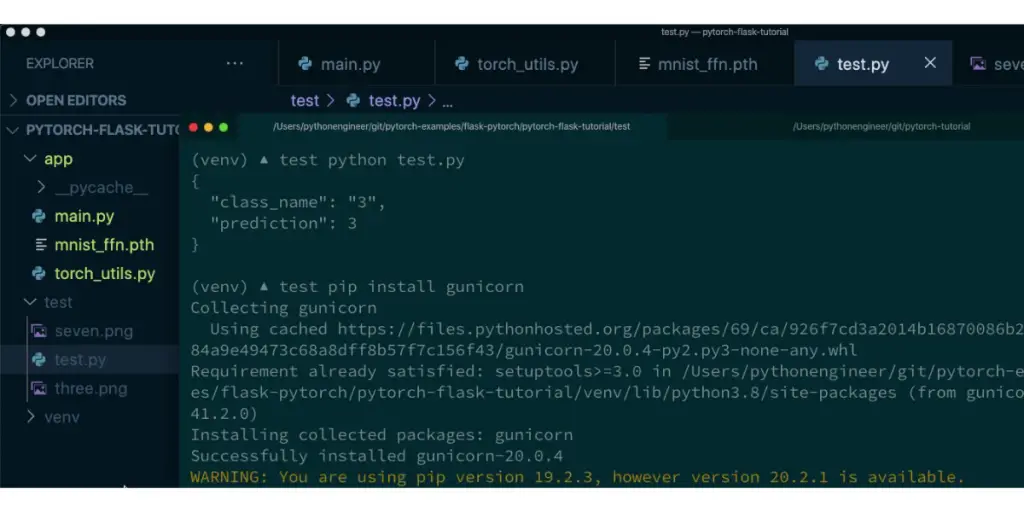

Mobile Applications

PyTorch supports a workflow that runs from Python to deployment on iOS and Android. It extends PyTorch’s API to cover the preprocessing and integration tasks needed to incorporate machine learning into a mobile application.

Ecosystem

An active community of researchers and developers has built an ecosystem of tools and libraries that extend PyTorch’s functionality.

Open Neural Network Exchange (ONNX) Support

PyTorch can export models in the standard ONNX format, which gives direct access to ONNX-compatible platforms, runtimes, and visualizers.

C++ Front-End

The C++ front end is a way to use PyTorch from C++. It is designed to resemble the Python front end and is intended to enable research in high-performance, low-latency, and bare-metal C++ applications.

Supports Cloud Platforms

PyTorch is supported on major cloud platforms such as Amazon Web Services, Google Cloud Platform, and Microsoft Azure, which simplifies developing and scaling your applications.

These platforms also support large-scale training on GPUs, so you can get the performance you need for your applications.

Screenshots of some PyTorch features are shown above.

Other information

| Property | Details |
| --- | --- |
| Platform | x86-32 (32-bit Intel x86), x86-64 |
| Programming languages | C++, Python, CUDA |
| License | BSD-3 |

Advantages

- It can be used from Python and C++, which keeps the learning curve moderate.

- It is developer-friendly and fast.

- Free tutorials are available.

- Computation is easy to spread across multiple CPUs.

- It provides dynamic computational graphs.
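The dynamic graph point is easiest to see in code. In the sketch below, which uses a hypothetical helper named `repeated_square`, an ordinary Python loop decides how many operations are recorded, and autograd differentiates whatever graph was actually built on that run.

```python
import torch


def repeated_square(x: torch.Tensor, steps: int) -> torch.Tensor:
    """Square x `steps` times; the graph is built as the loop runs."""
    y = x
    for _ in range(steps):
        y = y * y
    return y


x = torch.tensor(2.0, requires_grad=True)
y = repeated_square(x, steps=2)  # computes x ** 4 for this call

# Backpropagate through the graph recorded during this particular call.
y.backward()

print(y.item())       # value of x ** 4 at x = 2
print(x.grad.item())  # derivative 4 * x ** 3 at x = 2
```

Calling the function with a different `steps` value records a different graph on the next run, with no separate compilation step.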

Disadvantages

- It is not well suited to training on small amounts of data.

- Help with specific errors can be hard to find online and in the official documentation.

- You may face problems during scaling.

- Because of scalability issues, developing and integrating applications can be difficult.

- Community support for solving problems can be hard to find.

- It lacks a native visualization tool like TensorBoard, although TensorBoard can be used with PyTorch.

- Debugging can be difficult.

Alternatives

- TensorFlow

- scikit-learn

- Personalizer

- Google Cloud TPU

- Simple AI

- Keras

- Oracle Machine Learning

- Azure Machine Learning

- Amazon Deep Learning AMIs

- IBM Watson Machine Learning

Conclusion

This post has covered the essentials of PyTorch: its installation, features, and advantages. Weigh its disadvantages as well before deciding whether it is the best ML software for your needs.
